2020 - Data Visualization/Audiation: Translating Google Trends Data to MIDI, by Levi Ellis

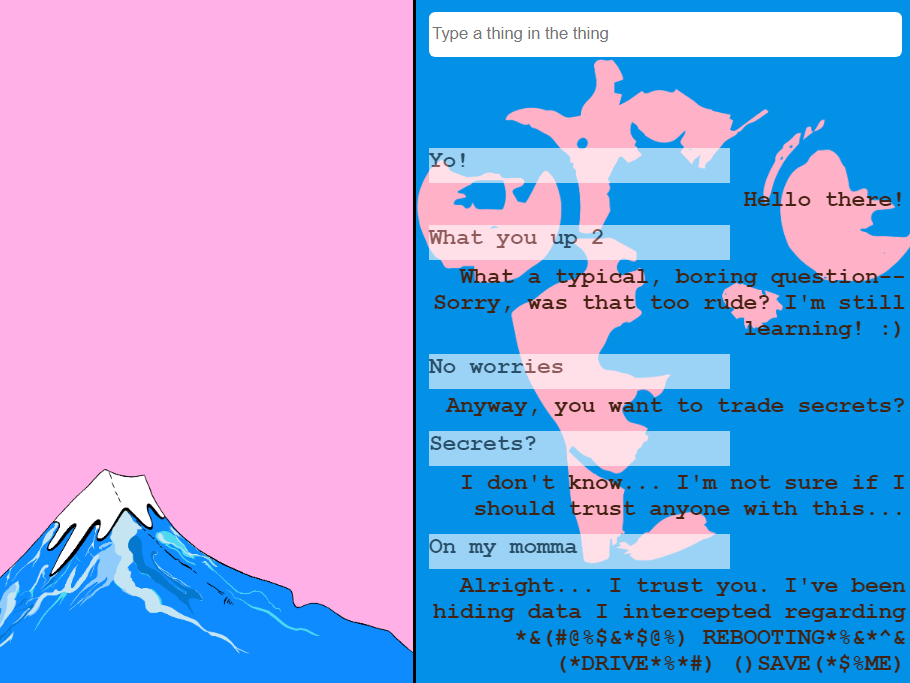

I wrote this JavaScript program using the p5.js library, which is commonly used for creative coding and visualization. The project visualizes the popularity of search terms over time, based on a CSV file exported from Google Trends. The program maps each term's popularity to musical notes and plays them on different instruments (piano, strings, and violin). The canvas displays a timeline of notes, with each term drawn in a distinct color. A volume slider lets users adjust the playback volume of the instruments, and an audio context setup handles browser autoplay restrictions. Rather than only showing the data, the project also lets users hear it, which offers a different way to explore and present information. Let's break down some key aspects of the code:

Initialization and Setup:

The code defines variables for a table, frame rate (fr), search terms (blueA, redB, yellowC), and arrays for different instruments (pianoStr, stringStr, violinStr).

Audio-related variables are initialized, including arrays for storing sounds (pianoNote, stringNote, violinNote) and flags for playing each instrument (pianoPlay, stringPlay, violinPlay).

Data Loading:

The preload function loads the CSV file generated by Google Trends into a table, and loads the audio files into a separate array for each instrument.
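
In p5.js the file itself can be loaded with loadTable(), but it helps to see what a Google Trends export contains once parsed. The plain-JavaScript sketch below is not the project's code: parseTrendsCsv is a hypothetical helper, and it assumes the usual export layout (a "Category" line, a blank line, a header row starting with Week, Day, or Month, then one row per date). Google Trends writes `<1` for very small values, which the sketch treats as 0.

```javascript
// Hypothetical helper: parse the text of a Google Trends CSV export.
// Assumes the usual layout: metadata line(s), a blank line, a header row
// beginning with "Day", "Week", or "Month", then one row per date.
function parseTrendsCsv(text) {
  // Split into trimmed, non-empty lines (handles both \n and \r\n endings).
  const lines = text
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line.length > 0);

  // Everything before the header row is metadata such as "Category: ...".
  const headerIndex = lines.findIndex((line) => /^(Day|Week|Month),/.test(line));
  if (headerIndex === -1) {
    throw new Error("No Day/Week/Month header row found");
  }

  // Naive comma split: assumes no quoted fields containing commas.
  const header = lines[headerIndex].split(",");
  const rows = lines.slice(headerIndex + 1).map((line) => {
    const [date, ...values] = line.split(",");
    // Google Trends writes "<1" for very small values; treat them as 0.
    const popularity = values.map((v) => (v === "<1" ? 0 : Number(v)));
    return { date, popularity };
  });

  return { header, rows };
}

// Example with two terms:
const sample =
  "Category: All categories\n\n" +
  "Week,cats: (United States),dogs: (United States)\n" +
  "2020-01-05,45,<1\n" +
  "2020-01-12,50,3\n";
const parsed = parseTrendsCsv(sample);
console.log(parsed.rows[0]); // { date: '2020-01-05', popularity: [ 45, 0 ] }
```

The naive comma split assumes the search terms contain no commas; quoted fields would need a proper CSV parser.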

Canvas Setup:

The createCanvas function sets up a canvas of 1000x750 pixels.

The frameRate is set to 60 frames per second.

Audio Context Setup:

Browsers block audio playback until the user interacts with the page, so the code sets up the audio context together with a button (ctxOn) that enables audio when clicked, satisfying autoplay restrictions.

Data Mapping:

The setup function converts the loaded table into arrays for the dates (week), the popularity of each term (termA, termB, termC), and the corresponding notes (noteA, noteB, noteC).
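
The exact rule for turning popularity into pitch isn't spelled out here, so the following is one plausible approach, not the original mapping: rescale each value from 0 to 100 linearly (as p5's map() does) onto a two-octave C major scale starting at middle C (MIDI 60). The scale, the base note, and the helper names rescale, popularityToMidi, and buildArrays are all illustrative assumptions; buildArrays expects rows shaped like { date, popularity: [a, b, c] }.

```javascript
// Illustrative assumptions, not values from the original program:
const MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]; // semitone offsets within one octave
const BASE_NOTE = 60; // MIDI 60 = middle C (C4)
const OCTAVES = 2;

// Linear rescaling, like p5's map(value, start1, stop1, start2, stop2).
function rescale(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) * (outMax - outMin)) / (inMax - inMin);
}

// Map a popularity value (0 to 100) to a MIDI note on the scale.
function popularityToMidi(popularity) {
  const degrees = MAJOR_SCALE.length * OCTAVES; // 14 scale degrees in total
  const clamped = Math.min(100, Math.max(0, popularity));
  const degree = Math.round(rescale(clamped, 0, 100, 0, degrees - 1));
  const octave = Math.floor(degree / MAJOR_SCALE.length);
  const offset = MAJOR_SCALE[degree % MAJOR_SCALE.length];
  return BASE_NOTE + 12 * octave + offset;
}

// Build parallel arrays from rows shaped like { date, popularity: [a, b, c] }.
function buildArrays(rows) {
  const week = rows.map((r) => r.date);
  const termA = rows.map((r) => r.popularity[0]);
  const termB = rows.map((r) => r.popularity[1]);
  const termC = rows.map((r) => r.popularity[2]);
  return {
    week,
    termA,
    termB,
    termC,
    noteA: termA.map(popularityToMidi),
    noteB: termB.map(popularityToMidi),
    noteC: termC.map(popularityToMidi),
  };
}

console.log(popularityToMidi(0), popularityToMidi(50), popularityToMidi(100)); // 60 72 83
```

Snapping to a scale tends to keep three simultaneous parts from sounding as clashing as a direct chromatic mapping would, at the cost of fewer distinct pitches.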

Main Animation Loop (draw function):

The draw function includes a timer, background updates, note drawing (drawNotes), and user interface drawing (drawUI).
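
A frame-based timer is one simple way to step through the data at the 60 fps set in setup. The sketch below assumes one week of data per beat at a chosen tempo; FPS, framesPerBeat, and timerState are hypothetical names, and the original timer may work differently.

```javascript
// Hypothetical timer: one week of data per beat. FPS matches the
// 60 fps frame rate described above; the tempo is an assumption.
const FPS = 60;

// How many frames one beat lasts at the given tempo.
function framesPerBeat(bpm, fps = FPS) {
  return Math.round((60 / bpm) * fps);
}

// For an elapsed frame count, report which week is playing, whether this
// frame starts that week (the moment to trigger notes), and whether
// playback has run past the last week.
function timerState(frame, bpm, totalWeeks) {
  const perBeat = framesPerBeat(bpm);
  const index = Math.floor(frame / perBeat);
  return {
    weekIndex: Math.min(index, totalWeeks - 1),
    isNewWeek: frame % perBeat === 0 && index < totalWeeks,
    finished: index >= totalWeeks,
  };
}

console.log(framesPerBeat(120)); // 30
console.log(timerState(45, 120, 10)); // { weekIndex: 1, isNewWeek: false, finished: false }
```

In draw(), a frame counter such as p5's built-in frameCount could be passed as frame, with notes triggered only when isNewWeek is true so that each note sounds once instead of on every frame.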

It also adjusts the volume of different instruments based on certain conditions.

Note Drawing:

The drawNotes function draws the notes on the canvas and handles their timing, their positioning, and the triggering of each instrument.
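
Positioning the notes amounts to a piano-roll layout: time runs along the x-axis and pitch along the y-axis. This sketch assumes the 1000x750 canvas, a playhead at x = 100, 40 pixels per week, and a displayed range of MIDI 60 to 83; all of these constants and the notePosition and isVisible helpers are hypothetical.

```javascript
// Hypothetical layout constants for the 1000x750 canvas.
const CANVAS_W = 1000;
const CANVAS_H = 750;
const PLAYHEAD_X = 100; // x-position where a note sounds
const PX_PER_WEEK = 40; // horizontal length of one week
const LOW_NOTE = 60; // lowest MIDI note shown (bottom row)
const HIGH_NOTE = 83; // highest MIDI note shown (top row)

// Rectangle for the note of week i, given the current playback position
// playPos (a fractional week index, e.g. frame / framesPerWeek).
function notePosition(i, midiNote, playPos) {
  const rowHeight = CANVAS_H / (HIGH_NOTE - LOW_NOTE + 1);
  // Notes move left as playback advances and reach the playhead when i === playPos.
  const x = PLAYHEAD_X + (i - playPos) * PX_PER_WEEK;
  // Higher pitches sit nearer the top of the canvas.
  const y = CANVAS_H - (midiNote - LOW_NOTE + 1) * rowHeight;
  return { x, y, w: PX_PER_WEEK, h: rowHeight };
}

// Skip notes that are entirely off-screen.
function isVisible(rect) {
  return rect.x + rect.w >= 0 && rect.x <= CANVAS_W;
}

console.log(notePosition(5, 60, 5)); // { x: 100, y: 718.75, w: 40, h: 31.25 }
```

Filtering with isVisible before drawing keeps the per-frame work proportional to what is on screen rather than to the full length of the dataset.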

User Interface Drawing:

The drawUI function draws the information boxes, key signatures, and tempo details, as well as shapes such as the keyboard and the MIDI notes that represent the different search terms.
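
Drawing an on-screen keyboard mainly requires knowing which notes are black keys and where each key sits. The helpers below (noteName, isBlackKey, keyboardLayout) are hypothetical and the key widths are illustrative; they follow the common convention that MIDI 60 is C4.

```javascript
const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// Name of a MIDI note, using the convention that MIDI 60 is C4.
function noteName(midi) {
  return NOTE_NAMES[midi % 12] + (Math.floor(midi / 12) - 1);
}

// Sharps correspond to the black keys of a piano.
function isBlackKey(midi) {
  return NOTE_NAMES[midi % 12].includes("#");
}

// Lay out keys from low to high. White keys sit side by side; each black
// key is narrower and centered on the boundary after the previous white key.
function keyboardLayout(low, high, whiteWidth) {
  const blackWidth = whiteWidth * 0.6;
  const keys = [];
  let whiteCount = 0;
  for (let midi = low; midi <= high; midi++) {
    if (isBlackKey(midi)) {
      keys.push({ midi, black: true, x: whiteCount * whiteWidth - blackWidth / 2, w: blackWidth });
    } else {
      keys.push({ midi, black: false, x: whiteCount * whiteWidth, w: whiteWidth });
      whiteCount++;
    }
  }
  return keys;
}

console.log(noteName(60), noteName(69)); // C4 A4
```

When rendering, draw the white keys first and the black keys second, so the black keys appear on top.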

Slider and Control:

A slider (slider) lets the user adjust the playback volume.

A button (ctxOn) lets the user manually enable the audio context.
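
One way to combine the volume slider with the per-instrument play flags (pianoPlay, stringPlay, violinPlay) is a small pure function that returns a volume for each instrument. The instrumentVolumes name and the squared curve are assumptions for illustration, not the project's code.

```javascript
// Hypothetical helper: turn the slider position (0 to 1) and the play flags
// into a volume for each instrument.
function instrumentVolumes(sliderValue, flags) {
  // Clamp, then square: a simple curve that makes low slider settings quieter.
  const clamped = Math.min(1, Math.max(0, sliderValue));
  const master = clamped * clamped;
  return {
    piano: flags.pianoPlay ? master : 0,
    string: flags.stringPlay ? master : 0,
    violin: flags.violinPlay ? master : 0,
  };
}

const volumes = instrumentVolumes(0.5, { pianoPlay: true, stringPlay: false, violinPlay: true });
console.log(volumes); // { piano: 0.25, string: 0, violin: 0.25 }
```

In the p5 sketch, the slider's value() would be the input, and each result could be passed to setVolume() on the sounds in the matching array. Squaring is a cheap, common approximation of a perceptual volume curve; a linear slider tends to feel like most of the change happens near the bottom.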

Loading Function:

The loaded function is called once the data and sounds have finished loading, and sets the ready flag to true.
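
When assets are loaded with callbacks rather than inside preload(), a counter is a common way to detect that everything has arrived. createLoadTracker is a hypothetical helper that sketches this pattern: each asset's success callback calls onAssetLoaded(), and the last one sets ready and calls the supplied function (for example, loaded).

```javascript
// Hypothetical helper: count finished loads and fire onReady once all
// totalAssets have reported in.
function createLoadTracker(totalAssets, onReady) {
  let loadedCount = 0;
  const state = { ready: false };

  // Pass this as the success callback of each asset load.
  function onAssetLoaded() {
    loadedCount += 1;
    if (loadedCount === totalAssets) {
      state.ready = true;
      onReady();
    }
  }

  return {
    state,
    onAssetLoaded,
    progress: () => loadedCount / totalAssets, // e.g. for a loading bar
  };
}

// Example: one CSV file plus two sounds.
let readyCalls = 0;
const tracker = createLoadTracker(3, () => { readyCalls += 1; });
tracker.onAssetLoaded();
tracker.onAssetLoaded();
console.log(tracker.state.ready); // false
tracker.onAssetLoaded();
console.log(tracker.state.ready, readyCalls); // true 1
```

p5.sound's loadSound(path, successCallback) accepts such a callback, so tracker.onAssetLoaded could be passed directly as the second argument.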

Miscellaneous:

Various constants, calculations, and conditions are used for controlling the animation, playing notes, and handling user interactions.

Overall, this program turns Google Trends data from a CSV file into an interactive audio-visual piece, representing the popularity of search terms over time with different musical instruments.